LinkedIn Recommendation Generator

On LinkedIn, users can add concise, public notes to each other’s profiles recommending them to potential employers or clients. Many users may want ChatGPT or a similar large language model chatbot to write a recommendation for them, but the chatbot interface is poorly suited to this particular task. I designed a prototype application that offers a better experience to users who want to use a large language model for this task.

The issue with chatbots

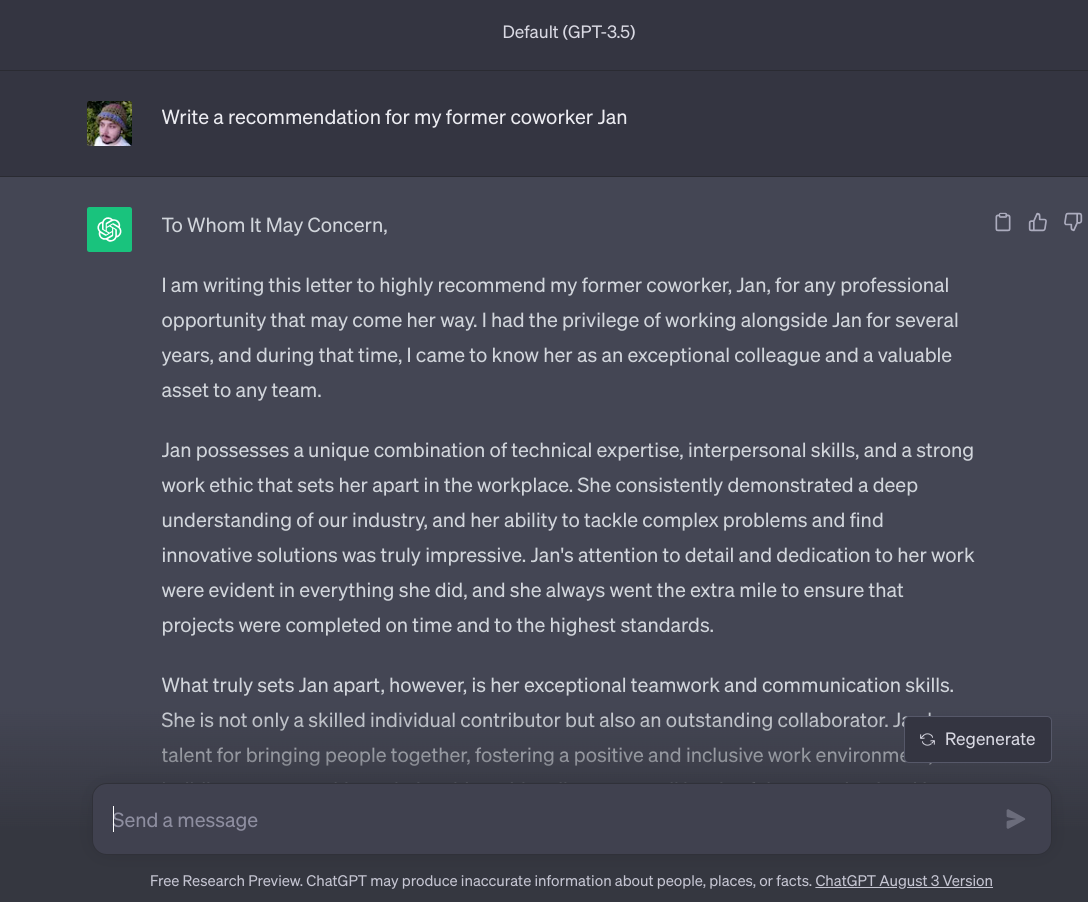

A layperson might start by simply asking the chatbot to write a recommendation, without any further instruction, but the result is largely unhelpful: it is styled as a formal letter of recommendation, runs to more than 300 words, and is extremely generic.

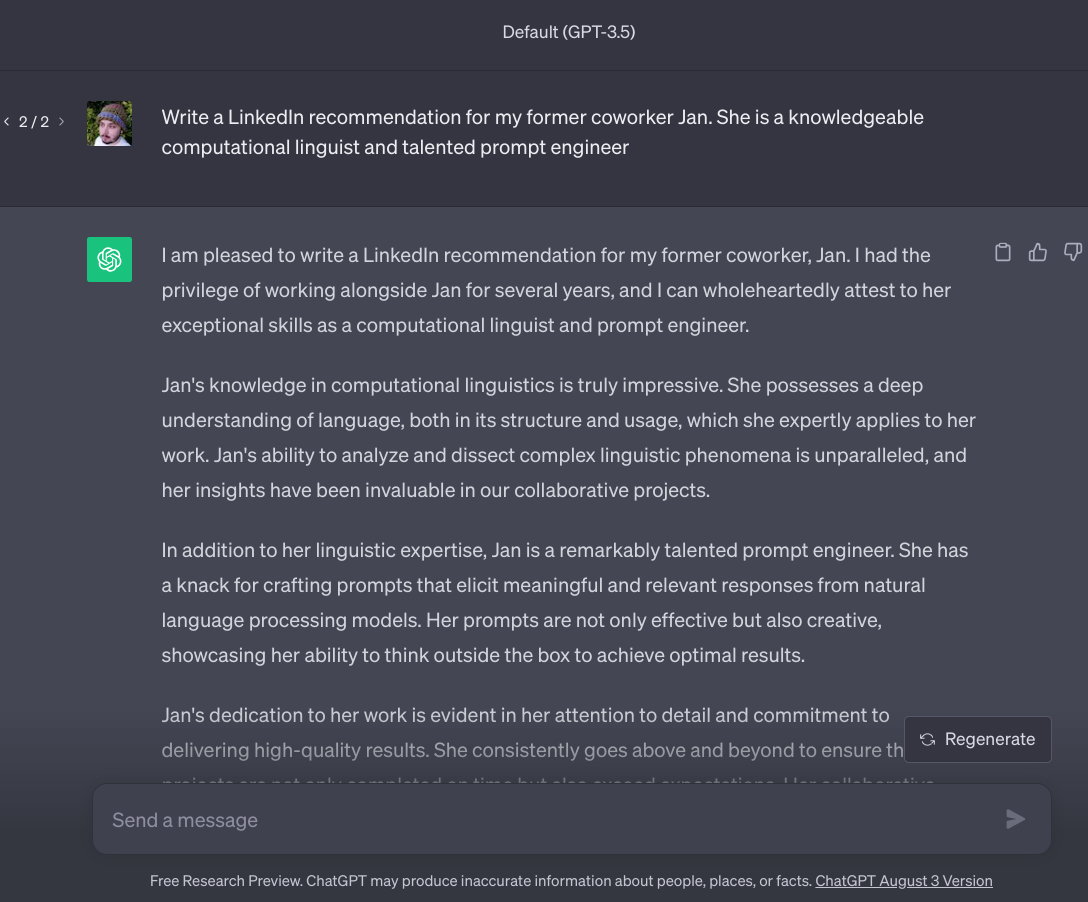

The logical next step is to add a little detail to the prompt, so I specify that I want a LinkedIn recommendation and add some information about Jan’s professional expertise. Unfortunately, the result is even worse! It is no longer styled as a formal letter of recommendation, but it is still far too long, and it now invents how long I have worked with her.

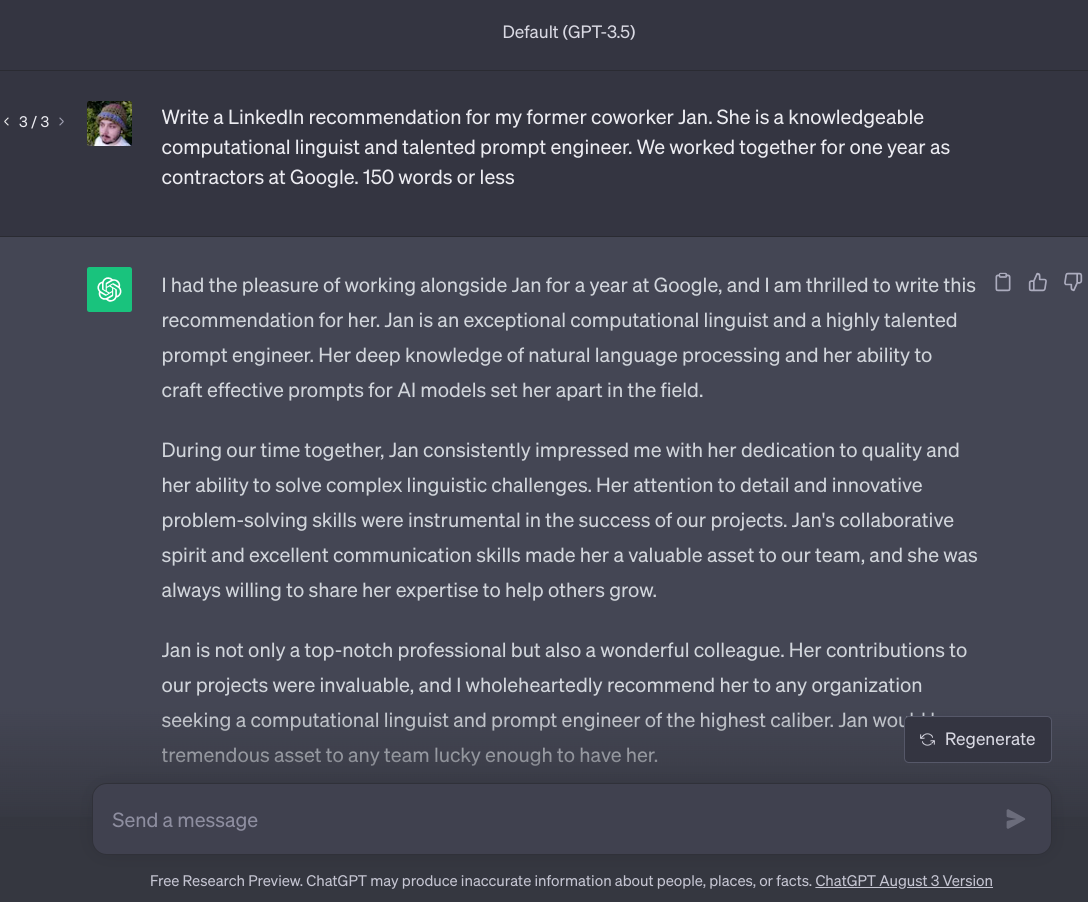

Let’s say I try one more time. I state how long we have worked together and ask for 150 words or fewer. The result is OK, if a little stiff, fairly generic, and still a bit too long.

After three tries, a reasonable user might just assume that this is the best they can get from a large language model. But it isn’t. By reimagining the interface between the user and language model, we can create a less frustrating experience that provides better results.

An alternative solution

The main problem in the example above is that the chatbot interface puts the burden on the user: they must define the task and work out what information is needed to complete it. Instead of having the user prompt the large language model (LLM) directly, I created a simple application that uses dynamic forms to prompt the user for the necessary information. The application then uses that information to prompt the LLM. Removing direct interaction with the LLM makes the user experience more predictable and less frustrating.

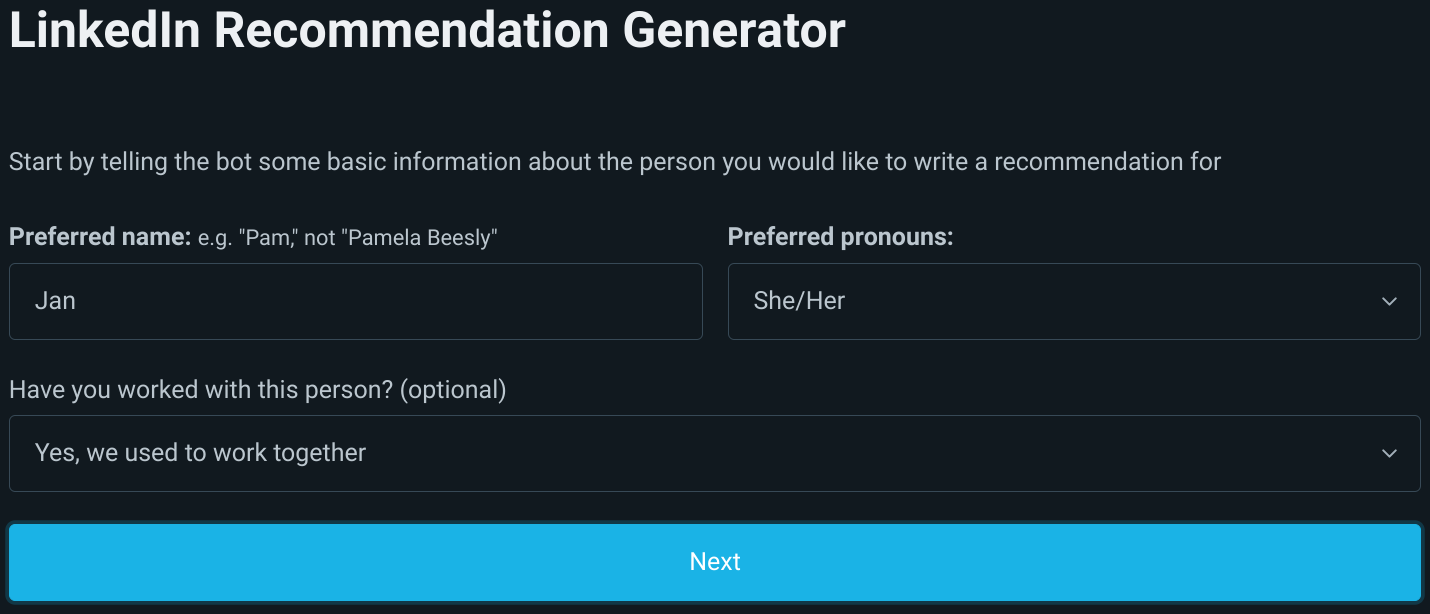

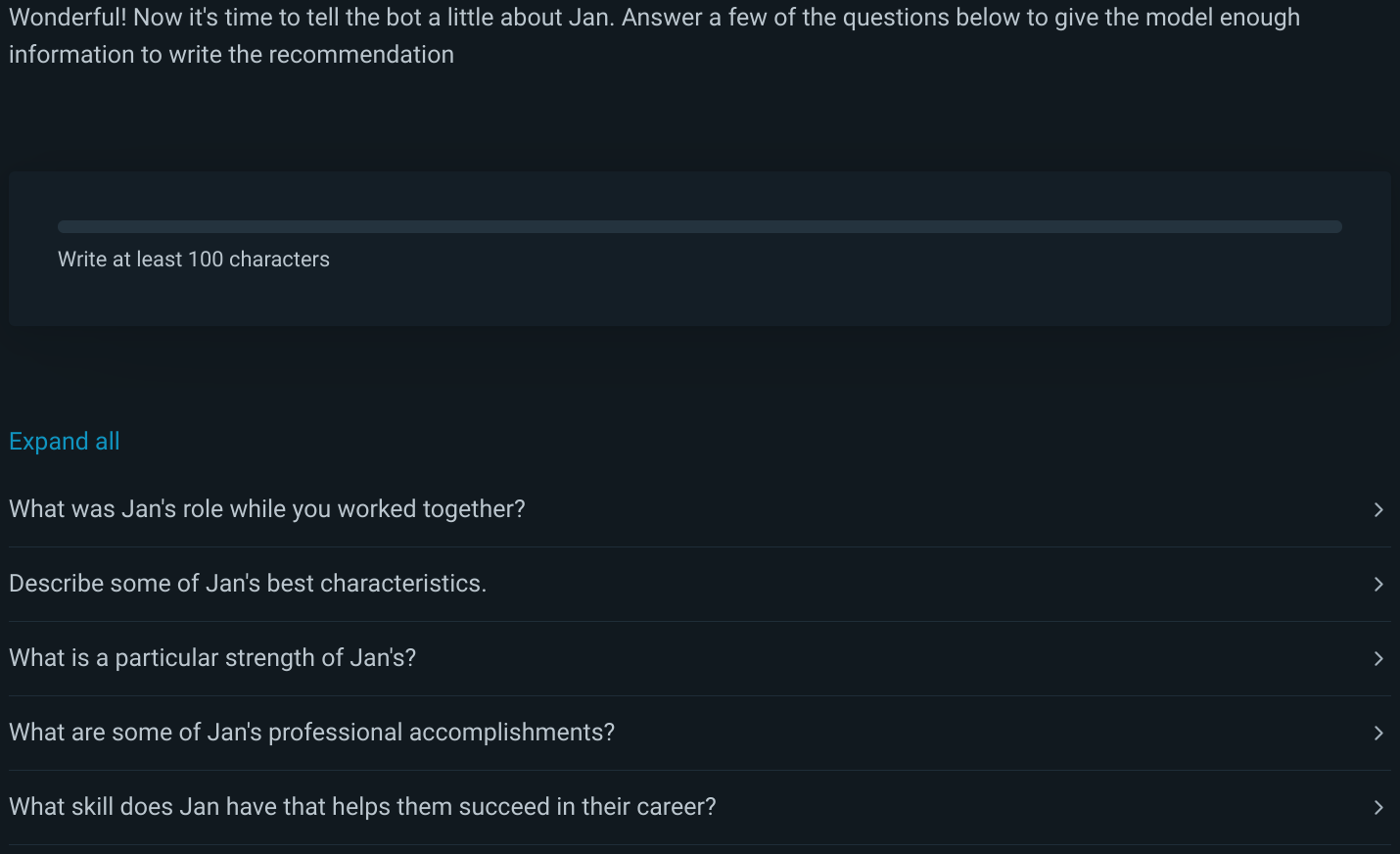

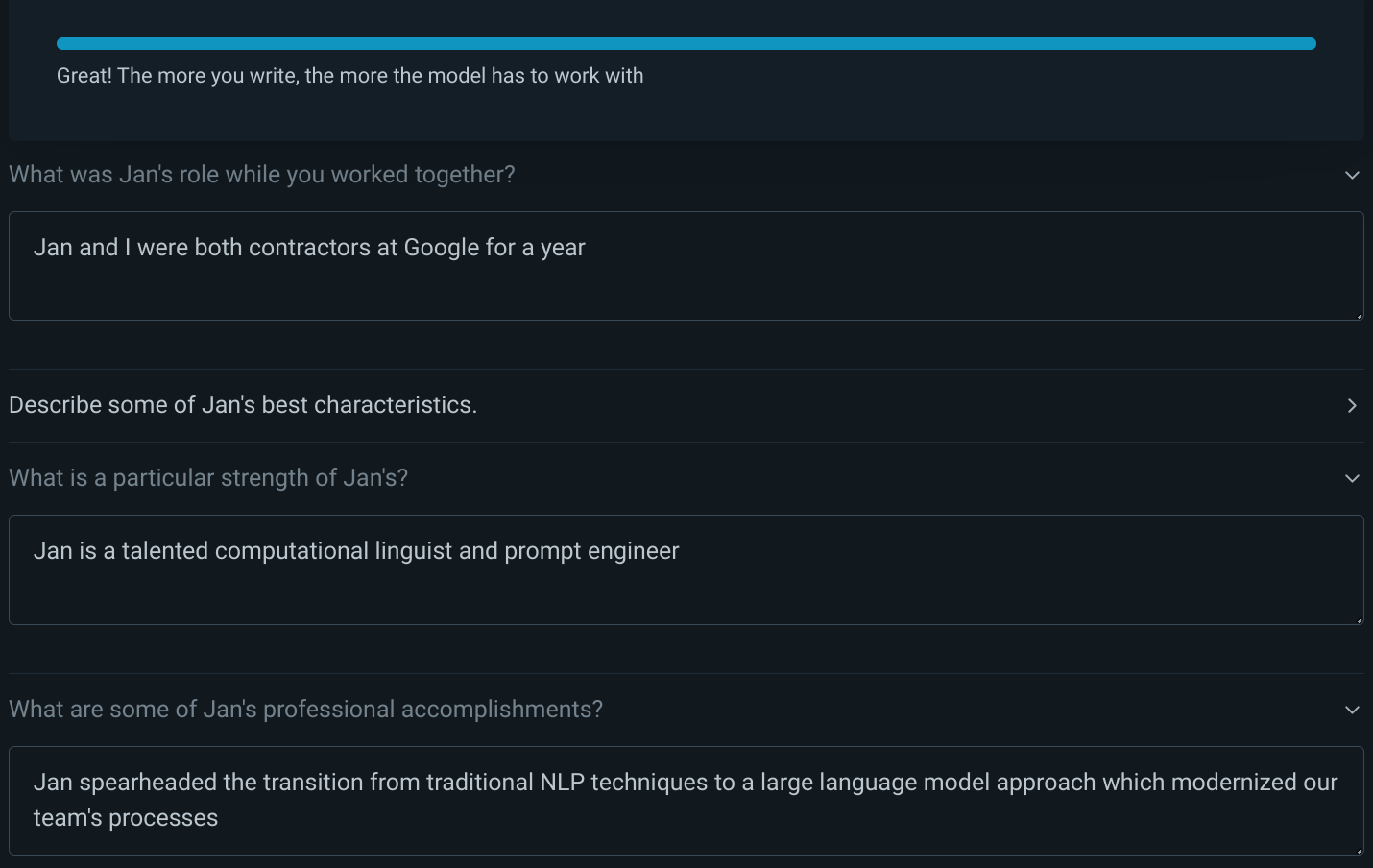

When the user enters the application, they are asked for basic information about the person they are recommending. This information is used in the second step to present the user with a set of thought-provoking free-response questions.

The user is instructed to answer 2-5 of the free-response questions. The answers they provide, as well as which questions they choose to answer, inform the content and style of the final recommendation.
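The prototype’s actual form fields and question bank aren’t published, so the following is only a minimal sketch of how the two steps could fit together. Everything in it is hypothetical: the `BasicInfo` fields, the relationship categories, the question wording, and the function names `questions_for` and `validate_answers`. It shows step-one answers selecting which free-response questions appear in step two, and the 2–5 answer rule being enforced before anything is sent to an LLM.

```python
from dataclasses import dataclass


# Hypothetical step-one fields; the real form may ask for different things.
@dataclass
class BasicInfo:
    name: str
    relationship: str  # e.g. "manager", "peer", "client"
    years_worked_together: int
    expertise: str


# Hypothetical question bank: relationship-specific questions plus general ones.
QUESTIONS_BY_RELATIONSHIP = {
    "manager": [
        "How did {name} help you grow in your role?",
        "How does {name} handle disagreements within the team?",
    ],
    "peer": [
        "What is it like to collaborate with {name} on a tough problem?",
        "When have you relied on {name}'s expertise?",
    ],
    "client": [
        "What result did {name} deliver for your organization?",
        "How did {name} communicate during the engagement?",
    ],
}

GENERAL_QUESTIONS = [
    "What is one thing {name} does better than anyone else you know?",
    "How has {name}'s skill in {expertise} shown up in your work together?",
    "What would you tell someone about to start working with {name}?",
]

MIN_ANSWERS, MAX_ANSWERS = 2, 5


def questions_for(info: BasicInfo) -> list[str]:
    """Build step two's question list from step one's answers."""
    specific = QUESTIONS_BY_RELATIONSHIP.get(info.relationship, [])
    # str.format ignores unused keyword arguments, so every template
    # can be filled the same way.
    return [
        q.format(name=info.name, expertise=info.expertise)
        for q in specific + GENERAL_QUESTIONS
    ]


def validate_answers(questions: list[str], answers: dict[str, str]) -> dict[str, str]:
    """Keep non-blank answers to offered questions and enforce the 2-5 rule."""
    answered = {
        q: a.strip() for q, a in answers.items() if q in questions and a.strip()
    }
    if not MIN_ANSWERS <= len(answered) <= MAX_ANSWERS:
        raise ValueError(
            f"Please answer between {MIN_ANSWERS} and {MAX_ANSWERS} questions "
            f"(got {len(answered)})."
        )
    return answered
```

For example, `questions_for(BasicInfo("Jan", "peer", 3, "data visualization"))` yields the two peer questions followed by the three general ones, each personalized with Jan’s name. Because the chosen questions are kept as keys alongside the answers, which questions the user picked can carry through to the prompt.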

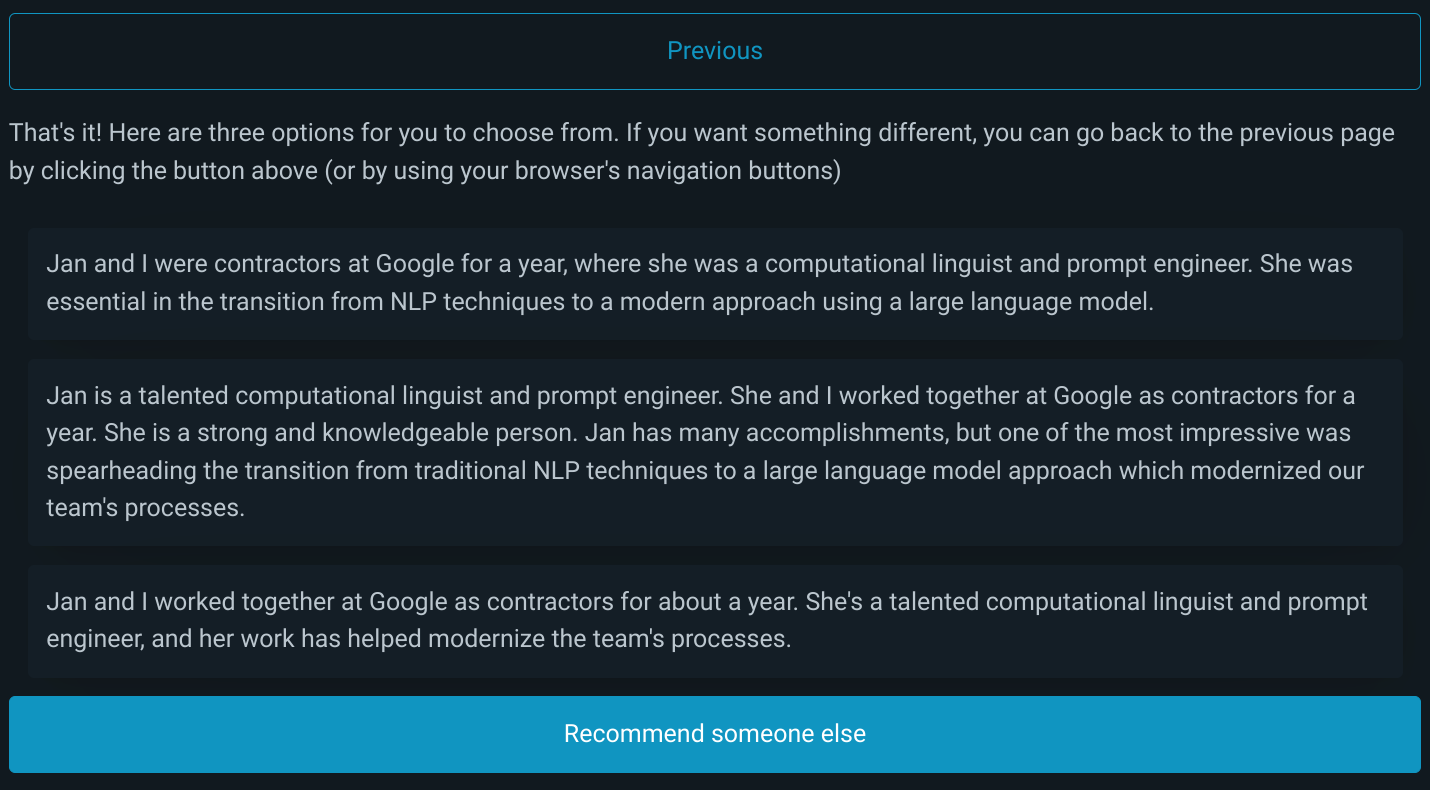

Once the user submits the form, the data from steps one and two are assembled into an engineered prompt and sent to an LLM. The user is then presented with three distinct options, all of which stick to the facts provided and avoid overly generic language.
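The engineered prompt itself and the model behind the prototype aren’t disclosed, so this is a minimal sketch under assumptions rather than the prototype’s implementation. The function names `build_prompt` and `parse_options`, the instruction wording, the `---` separator, and the 100-word default are all hypothetical. It shows one way to fold the step-one fields and step-two answers into a single prompt that targets the failures seen in the chatbot examples (inventing facts, letter formatting, excessive length) and asks for three options, then splits the model’s reply back into separate options.

```python
SEPARATOR = "---"  # hypothetical delimiter the model is asked to place between options


def build_prompt(name: str, relationship: str, years: int, expertise: str,
                 answers: dict[str, str], n_options: int = 3,
                 max_words: int = 100) -> str:
    """Combine form data into one prompt (wording is illustrative only)."""
    qa = "\n".join(f"Q: {q}\nA: {a}" for q, a in answers.items())
    year_word = "year" if years == 1 else "years"
    return (
        f"Write {n_options} distinct LinkedIn recommendations for {name}.\n"
        f"The writer is {name}'s {relationship} and has worked with them "
        f"for {years} {year_word}.\n"
        f"{name}'s area of expertise: {expertise}.\n\n"
        # Guard against the invented details seen in the chatbot examples.
        "Use only the facts below. Do not invent dates, titles, projects, or numbers.\n"
        "Write in a warm, specific first-person voice and avoid generic praise.\n"
        f"Keep each recommendation under {max_words} words. Do not format it as a letter.\n"
        f"Separate the recommendations with a line containing only {SEPARATOR}.\n\n"
        f"{qa}"
    )


def parse_options(completion: str, n_options: int = 3) -> list[str]:
    """Split the model's reply on separator lines and check the count."""
    options, current = [], []
    for line in completion.splitlines():
        if line.strip() == SEPARATOR:
            options.append("\n".join(current).strip())
            current = []
        else:
            current.append(line)
    options.append("\n".join(current).strip())
    options = [o for o in options if o]  # drop empty chunks from stray separators
    if len(options) != n_options:
        raise ValueError(f"Expected {n_options} options, got {len(options)}")
    return options
```

The call to the model is deliberately left out because it depends on the provider; whichever client is used would send the output of `build_prompt(...)` and pass the returned text to `parse_options`. Asking for all three options in one response is just one choice; requesting several independent completions of a single-recommendation prompt would be a reasonable alternative.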

If you would like to try the prototype, you can visit recommend.tarlov.dev. Please be mindful that this prototype costs me money to host. If you would like to use it frequently, please email me.